Ruiqi Wang

Ph.D. student in Computer Science & Engineering @ WashU.

I am a member of the AI for Health Institute and the Cyber-Physical Systems Laboratory. I earned my BSE degrees in ECE and CE from the University of Michigan, Ann Arbor, and Shanghai Jiao Tong University (SJTU, 上海交通大学). I am a recipient of the 2025 Google PhD Fellowship.

My research lies at the intersection of Machine Learning Systems, Embedded Systems, Computer Vision, and Human Action Recognition, with a focus on impactful real-world applications.


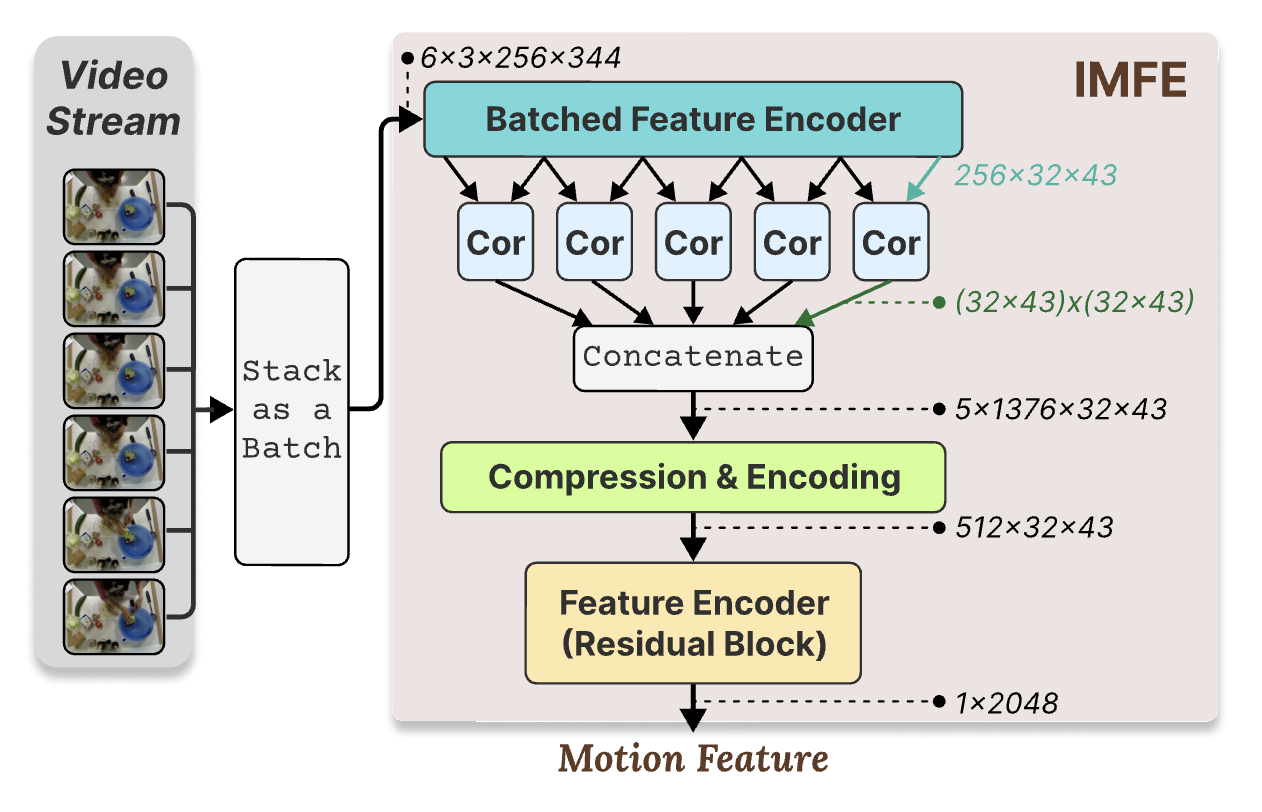

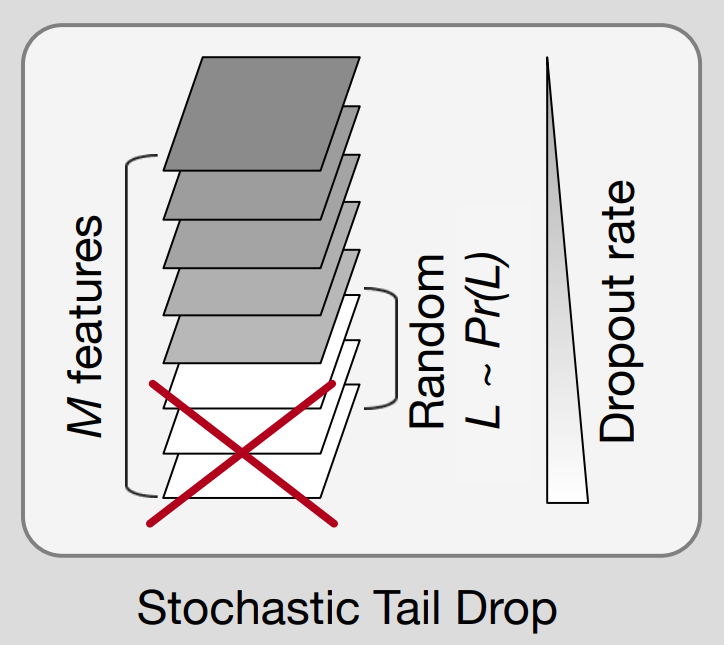

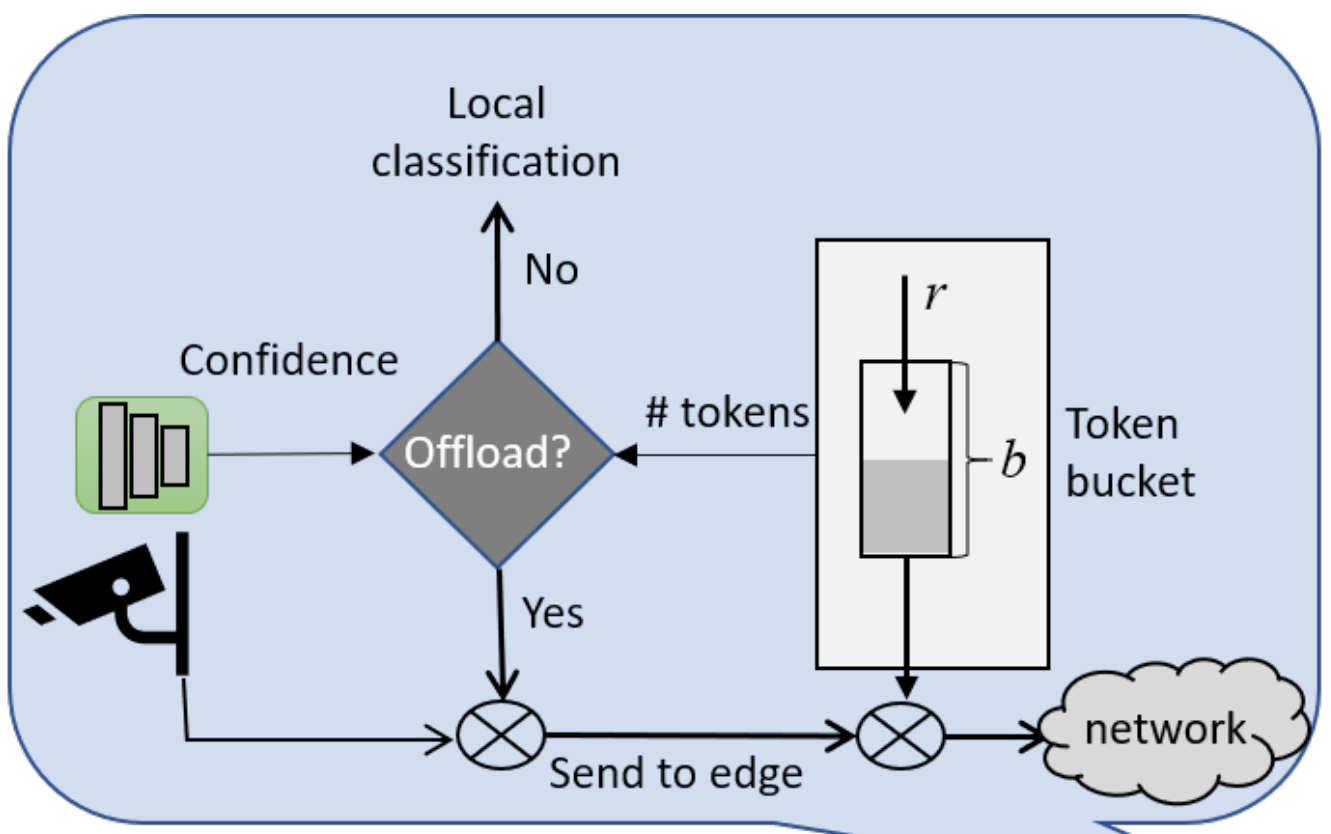

In Embedded Systems and Edge Computing, I develop efficient algorithms for machine learning inference on resource-constrained devices, addressing complex tasks like image classification and video-based action recognition. My work includes optimizing offloading strategies to balance performance under strict deadlines and limited resources, ensuring high accuracy and low latency in real-time systems.


In AI for Health, my Smart Kitchen project uses computer vision and action recognition to help individuals with cognitive impairments by detecting and correcting action sequencing errors in daily tasks like cooking. I also apply deep learning and computer vision to identify blood cancers from microscopic images, improving diagnostic accuracy.

Through these efforts, I aim to develop cutting-edge, deployable solutions for smart environments and healthcare, leveraging embedded systems and AI to make meaningful societal contributions. One of my projects earned the Best Student Paper Award at the IEEE Real-Time Systems Symposium (RTSS '23).

news

| Dec 05, 2025 | 📄 My work with Jingwen Zhang, PhD (first author), *Addressing Cohort Variability with Adaptive Fusion of Wearable and Clinical Data: A Case Study in Predicting Pancreatic Surgery Outcomes*, has been accepted to ACM Transactions on Computing for Healthcare (HEALTH). Paper Summary |
|---|---|
| Oct 23, 2025 | 🏆 I have received the 2025 Google PhD Fellowship, recognizing and supporting my research on AI for health. The news was also featured by WashU Engineering. |
| Sep 18, 2025 | 📄 *CHEF-VL: Detecting Cognitive Sequencing Errors in Cooking with Vision-Language Models* has been accepted to Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies and will be presented at UbiComp / ISWC 2026 in Shanghai in Oct. 2026. Paper Summary |
| Jul 12, 2025 | 📄 *Real-time video-based human action recognition on embedded platforms* has been accepted to appear at ACM/IEEE EMSOFT @ ESWEEK 2025. |
| Jun 01, 2025 | 💼 Research Engineer Intern at Plus (May 2025 – Aug 2025, Santa Clara, CA): developing vision-language models for autonomous driving and curating datasets from real-world and synthetic sources. |

selected publications

- UbiComp 2026

CHEF-VL: Detecting Cognitive Sequencing Errors in Cooking with Vision-Language Models. *Proceedings of the ACM on Interactive, Mobile, Wearable and Ubiquitous Technologies*, Dec 2025.
